A team of University of Toronto engineering researchers is working to enhance the reasoning ability of robotic systems, such as autonomous vehicles, with the goal of making them more reliable and safer to operate in changing environments.

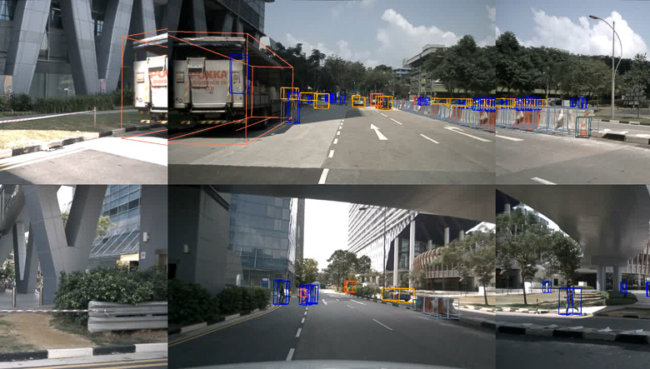

Multi-object tracking, a critical problem in self-driving cars, is a big focus at the Toronto Robotics and AI Laboratory (TRAIL), led by Professor Steven Waslander (UTIAS). Robotic systems use this process to track the position and motion of moving objects, including other vehicles, pedestrians and cyclists, so that they can plan their own path in densely populated areas.

The tracking information is collected from computer vision sensors — 2D camera images and 3D LIDAR scans — and filtered at each time stamp, 10 times a second, to predict the future movement of moving objects.

“Once processed, it allows the robot to develop some reasoning about its environment. For example, there is a human crossing the street at the intersection, or a cyclist changing lanes up ahead,” says Sandro Papais (UTIAS PhD student).

“At each time stamp, the robot’s software tries to link the current detections with objects it saw in the past, but it can only go back so far in time.”
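The linking step Papais describes can be pictured with a minimal sketch. It assumes a constant-velocity motion model and a greedy nearest-neighbour match, neither of which is taken from the team's papers; the names `predict`, `associate` and `max_dist` are hypothetical and chosen only for illustration.

```python
import math

DT = 0.1  # seconds between frames, i.e. 10 updates per second (from the article)

def predict(track, dt=DT):
    """Constant-velocity guess of where a track (x, y, vx, vy) will be after dt seconds."""
    x, y, vx, vy = track
    return (x + vx * dt, y + vy * dt)

def associate(tracks, detections, max_dist=2.0):
    """Greedily link each detection to the nearest predicted track within max_dist metres.

    tracks: dict of track_id -> (x, y, vx, vy)
    detections: list of (x, y)
    Returns a dict of detection index -> track_id; unmatched detections are left out.
    """
    predicted = {tid: predict(t) for tid, t in tracks.items()}
    # Every (distance, track, detection) candidate pair, closest first.
    pairs = sorted(
        (math.dist(p, d), tid, i)
        for tid, p in predicted.items()
        for i, d in enumerate(detections)
    )
    matches, used_tracks = {}, set()
    for dist, tid, i in pairs:
        if dist > max_dist:
            break  # all remaining pairs are even farther apart
        if i in matches or tid in used_tracks:
            continue  # each track and each detection is used at most once
        matches[i] = tid
        used_tracks.add(tid)
    return matches

tracks = {"car_1": (0.0, 0.0, 10.0, 0.0), "ped_7": (5.0, 5.0, 0.0, 1.0)}
detections = [(5.0, 5.1), (1.0, 0.05), (30.0, 30.0)]
print(associate(tracks, detections))  # {0: 'ped_7', 1: 'car_1'}
```

The third detection is far from every predicted position, so it stays unmatched. A real tracker would have to decide whether it is a new object or a false positive.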

In a new paper presented at the 2024 International Conference on Robotics and Automation (ICRA) in Yokohama, Japan, Papais and his co-authors Robert Ren (Year 3 EngSci) and Waslander introduce Sliding Window Tracker (SWTrack). The graph-based optimization method uses additional temporal information to prevent missed objects and improve the performance of tracking methods, especially when objects are occluded in the current viewpoint.

“My current research direction expands robot reasoning by improving its memory capabilities,” says Papais.

“SWTrack widens how far into the past a robot considers when planning. So instead of being limited by what it just saw one frame ago and what is happening now, it can look over the past five seconds and then try to reason through all the different things it has seen.”
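To illustrate why a longer memory helps with occlusion, here is a small sketch of a sliding window over past frames. It is not SWTrack's graph-based optimization: it only shows the buffering idea, ignores object motion during the gap, and uses hypothetical names (`WindowedMemory`, `relink`, `max_dist`).

```python
from collections import deque
import math

FRAME_RATE_HZ = 10   # from the article: detections arrive 10 times a second
WINDOW_SECONDS = 5   # from the article: the longest window the team describes
WINDOW_FRAMES = FRAME_RATE_HZ * WINDOW_SECONDS

class WindowedMemory:
    """Keeps the most recent frames and remembers where each object was last seen."""

    def __init__(self, window_frames=WINDOW_FRAMES):
        self.frames = deque(maxlen=window_frames)  # older frames fall off automatically

    def add_frame(self, observations):
        """observations: dict of track_id -> (x, y) seen in this frame."""
        self.frames.append(dict(observations))

    def last_seen(self):
        """Most recent position of every object observed anywhere in the window."""
        latest = {}
        for frame in self.frames:  # oldest to newest, so newer entries overwrite
            latest.update(frame)
        return latest

    def relink(self, detection, max_dist=3.0):
        """Return the id of the closest remembered object within max_dist, else None."""
        candidates = [
            (math.dist(pos, detection), tid) for tid, pos in self.last_seen().items()
        ]
        if not candidates:
            return None
        dist, tid = min(candidates)
        return tid if dist <= max_dist else None

memory = WindowedMemory()                        # five-second window
short_memory = WindowedMemory(window_frames=1)   # only remembers the previous frame
for m in (memory, short_memory):
    m.add_frame({"cyclist_3": (10.0, 2.0)})
    for _ in range(20):                          # two seconds hidden from view
        m.add_frame({})

print(memory.relink((11.0, 2.0)))        # cyclist_3
print(short_memory.relink((11.0, 2.0)))  # None
```

With the longer window the reappearing cyclist keeps its identity, while the one-frame memory has already forgotten it and would have to start a new track.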

The team trained, tested and validated their algorithm on nuScenes, a public large-scale dataset for autonomous driving gathered from vehicles operating in the field. These cars have driven on many different roads, in cities around the world, and the data includes human annotations that the team used to benchmark the performance of SWTrack.

They found that each time they extended the temporal window, up to a maximum of five seconds, the tracking performance got better. Beyond five seconds, however, the added computation time slowed the algorithm down.

“Most tracking algorithms would have a tough time reasoning over some of these temporal gaps. But in our case, we were able to validate that we can track over these longer periods of time and maintain more consistent tracking for dynamic objects around us,” says Papais.

Waslander’s lab group also presented a second paper on the multi-object tracking problem at ICRA, co-authored by Chang Won (John) Lee (EngSci 2T2 + PEY, UTIAS MASc student).

The paper presents UncertaintyTrack, a collection of extensions for 2D tracking-by-detection methods to leverage probabilistic object detection in multi-object tracking.

“Probabilistic object detection quantifies the uncertainty estimates of object detection,” says Lee. “The key thing here is that for safety-critical tasks, you want to be able to know when the predicted detections are likely to cause errors in downstream tasks such as multi-object tracking. These errors can occur because of low-lighting conditions or heavy object occlusion.

“Uncertainty estimates give us an idea of when the model is in doubt, that is, when it is highly likely to give errors in predictions. But there’s this gap because probabilistic object detectors aren’t currently used in multi-object tracking.”
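One common way to let detection uncertainty influence tracking is to measure distances relative to the detector's reported covariance, known as the Mahalanobis distance. The sketch below shows that general idea only. It is not claimed to be how UncertaintyTrack works, and the covariances and the gate value are made-up numbers for illustration.

```python
import math

def mahalanobis_2d(point, mean, cov):
    """Mahalanobis distance between point and mean under a 2x2 covariance matrix."""
    (a, b), (c, d) = cov
    det = a * d - b * c
    if det <= 0:
        raise ValueError("covariance must be positive definite")
    dx, dy = point[0] - mean[0], point[1] - mean[1]
    # The inverse of [[a, b], [c, d]] is (1 / det) * [[d, -b], [-c, a]].
    q = (dx * (d * dx - b * dy) + dy * (-c * dx + a * dy)) / det
    return math.sqrt(q)

predicted_track = (0.0, 0.0)   # where the tracker expects the object to be
detection = (2.0, 0.0)         # where the detector says it is

confident_cov = [[0.25, 0.0], [0.0, 0.25]]  # hypothetical: standard deviation 0.5
uncertain_cov = [[4.0, 0.0], [0.0, 4.0]]    # hypothetical: standard deviation 2.0

GATE = 3.0  # hypothetical threshold: larger distances are treated as mismatches

d_confident = mahalanobis_2d(detection, predicted_track, confident_cov)
d_uncertain = mahalanobis_2d(detection, predicted_track, uncertain_cov)
print(d_confident, d_confident <= GATE)  # 4.0 False
print(d_uncertain, d_uncertain <= GATE)  # 1.0 True
```

The same two-unit offset is a clear mismatch when the detector is confident, but still a plausible match when the detector reports that it is in doubt, for example in low light or under heavy occlusion.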

Lee worked on this paper as part of his engineering science undergraduate thesis. He is now a master’s student in Waslander’s lab, researching visual anomaly detection for the Canadarm3.

“In my current research, we are aiming to come up with a deep-learning-based method that detects objects floating in space that pose a potential risk to the robotic arm,” Lee says.

But before Lee began pursuing robotics research, he was a member of aUToronto, U of T’s self-driving car team, which competes annually at the SAE AutoDrive Challenge. Lee was part of the 3D object detection sub-team and later the multi-object tracking sub-team.

“Through aUToronto, I got involved with Professor Waslander’s lab because he’s a faculty advisor for the team,” Lee says. “I reached out to him as my thesis advisor and started doing literature review of his previous work.

“I saw that he was doing active research on multi-object tracking and had previous papers in probabilistic object detection. I saw a potential connection between probabilistic object detection and his current multi-object tracking research, which gave me the idea of using localization estimates in object tracking.”

Papais was also a past member of the aUToronto team, working on the perception and detection sub-teams.

“We had a lot of challenges with the temporal filtering of information during my time at aUToronto,” Papais says.

“The team had strong detection performance, but we found our challenge was in processing these detections and tracking and filtering objects across time. There were imperfections and noisy detections; objects you might have missed or objects that come up as false positives because the vehicles think there’s something there but there isn’t.

“I saw a lot of these errors firsthand at competition too and it drove a lot of my inspiration and thinking about my research and its potential impact.”

Papais is looking forward to building on this idea of improving robot memory and extending it to other parts of the robotics perception infrastructure.

“This is just the beginning,” says Papais. “We’re working on the tracking problem, but also other robot problems, where we can incorporate more temporal information to enhance perception and robotic reasoning.”

“TRAIL has been working on assessing perception uncertainty and expanding temporal reasoning for robotics for multiple years now, as they are the key roadblocks to deploying robots in the open world more broadly,” says Waslander.

“We desperately need AI methods that can understand the persistence of objects over time, and ones that are aware of their own limitations and will stop and reason when something new or unexpected appears in their path. This is what our research aims to do.”