From the outer reaches of space to the inside of the human body, robots extend our reach into places we otherwise couldn’t go. Today, improvements in sensor technology and control algorithms are bringing robots into many aspects of our daily lives, including factory floors, hospital rooms and the air above our cities.

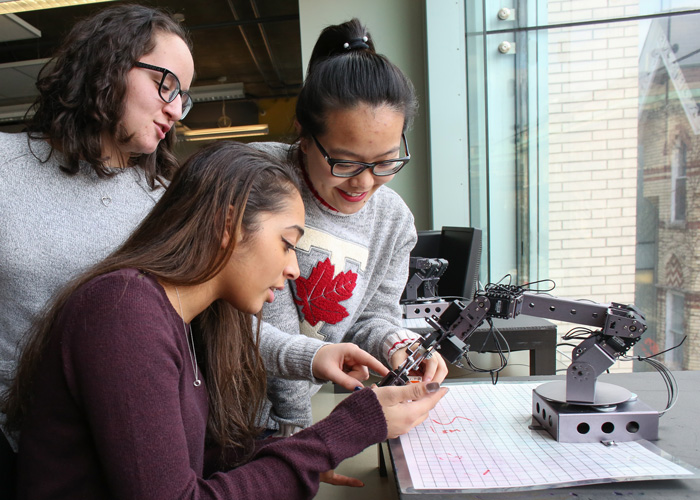

U of T Engineering hosts a robust suite of educational and research programs in robotics. These include our undergraduate minor in Robotics and Mechatronics, our Engineering Science Major in Robotics Engineering and our MEng emphasis in Robotics and Mechatronics.

Through ground-breaking research, our students and professors are blazing a path to new applications in exploration, medicine and advanced manufacturing. Examples include:

Autonomous field robotics

Professor Tim Barfoot (UTIAS) heads the Autonomous Space Robotics Laboratory at the University of Toronto Institute for Aerospace Studies. In addition to a number of self-driving vehicles (on road and off), the team’s suite of robots includes the Tethered Robotic Explorer (TReX), which can rappel down nearly vertical surfaces and could be used for applications such as inspecting power dams for defects.

Watch TReX in action in a gravel pit in Sudbury

Barfoot and his team have developed an innovative technique known as “Visual Teach and Repeat.” This involves a human operator driving the robot along a route he or she knows to be safe, while the robot logs the visual information associated with that route. By comparing its current location to the previously taught route, the robot can adjust its actions to stay on the right path despite extreme changes in lighting and weather.
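The "repeat" half of that idea can be illustrated with a minimal sketch. It is not the team's implementation: it assumes the robot's position has already been estimated (the real system does this by matching what its cameras see against the logged imagery), it treats the taught route as a list of 2D waypoints, and the names `lateral_offset`, `steering_correction` and `gain` are hypothetical.

```python
import math

def lateral_offset(route, position):
    """Signed distance from `position` to the nearest segment of the taught route.

    Positive means the robot is to the left of the route's direction of travel,
    negative means it is to the right.
    """
    px, py = position
    best = None
    for (ax, ay), (bx, by) in zip(route, route[1:]):
        dx, dy = bx - ax, by - ay
        seg_len_sq = dx * dx + dy * dy
        if seg_len_sq == 0.0:
            continue  # skip repeated waypoints
        # Project the position onto the segment, clamped to its endpoints.
        t = ((px - ax) * dx + (py - ay) * dy) / seg_len_sq
        t = max(0.0, min(1.0, t))
        cx, cy = ax + t * dx, ay + t * dy
        dist = math.hypot(px - cx, py - cy)
        # The cross product's sign tells us which side of the segment we are on.
        cross = dx * (py - ay) - dy * (px - ax)
        signed = math.copysign(dist, cross) if dist > 0 else 0.0
        if best is None or dist < abs(best):
            best = signed
    if best is None:
        raise ValueError("route needs at least two distinct waypoints")
    return best

def steering_correction(route, position, gain=0.5):
    """Proportional command that pushes the robot back toward the taught route.

    A negative value means steer right, a positive value means steer left.
    """
    return -gain * lateral_offset(route, position)

taught_route = [(0.0, 0.0), (10.0, 0.0)]  # recorded during the "teach" drive
print(steering_correction(taught_route, (5.0, 1.0)))  # robot drifted left of the route
```

A simple proportional correction is used here only to keep the example short; the article does not say which controller the robots actually use.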

Watch highlights from a test of the Visual Teach and Repeat technique

A similar approach can also be used with unpiloted aerial vehicles (UAVs, also known as drones). Barfoot and Professor Angela Schoellig (UTIAS) are collaborating with Drone Delivery Canada on a system to enable UAVs to navigate using visual signals rather than data from GPS satellites. They’ve demonstrated the method in field trials at Canadian Forces Bases in Suffield and Montreal.

Barfoot is also working with Schoellig as a faculty advisor for aUToronto, U of T Engineering’s entry in the AutoDrive Challenge™. The team came out on top at the first competition in the three-year challenge. “We’re aggressively preparing for year two now,” says Barfoot. “I’m blown away by the sophistication of this team and can’t wait to see what comes next.”

Robotic cell surgery

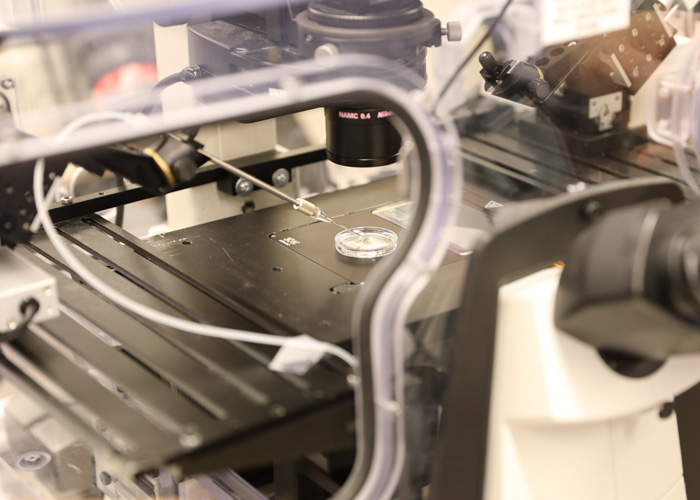

Professor Yu Sun (MIE) holds the Canada Research Chair in Micro and Nanoengineering Systems. He and his team produce robots with the ability to sense and manipulate individual cells, as well as even smaller structures such as chromosomes and proteins.

In collaboration with Dr. Robert Casper at Mount Sinai Hospital, Sun and his group developed the first robotic cell surgery system for manipulating individual sperm and egg cells for in vitro fertilization treatment.

Where previous systems relied on human hands or manipulators controlled by joysticks, the new system enables robotic control. This enhances the accuracy and consistency of movements, while also allowing for remote operation. The team tested the system’s ability to inject sperm into eggs in clinical trials, demonstrating the first robotically assisted human fertilization. In collaboration with Dr. Cliff Librach at the CReATe Fertility Centre and Dr. Keith Jarvi at Mount Sinai Hospital, Sun and his team also showed that their new system can select the optimal sperm for use in treatment.

More recently, the team developed a robotic tool that can quickly inject a fluorescent dye into individual heart cells grown in a 96-well plate. The dye is used to measure a quality known as the gap junction function, which is impaired in patients with certain heart conditions.

Injecting the dye by hand is time-consuming, but using the robot allows for thousands of cells to be tested at once. This opens the door to high-throughput screens: large numbers of drugs can be tested on heart cells isolated from an individual patient to determine which ones would be most effective at improving the gap junction function. Sun’s team is collaborating with Dr. Robert Hamilton and Dr. Jason Maynes at The Hospital for Sick Children to screen libraries of drug molecules for specific patients, an example of personalized medicine.

“Robotic systems are now being deployed in hospitals for tissue-level surgery, but performing surgery at the cell level is full of new challenges,” says Sun. “The robots we are developing could open up entirely new strategies for treatment and personalized medicine.”

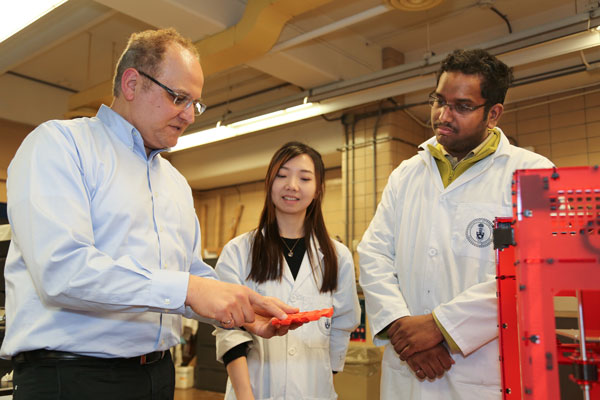

Factory of the future

The manufacturing industry was one of the first to implement robotics on a wide scale. But as with other domains, new technologies and economic pressures are leading to a shift in how robots are used on the factory floor.

Professor Hani Naguib (MIE) and his colleagues are developing the next generation of manufacturing robots. One technique they are leveraging is called smart sensing. This involves adding sensors across the factory floor, including wearable sensors to track how operators are behaving or CCD cameras that keep track of the actions of robots. Data collected by these sensors can be used in combination with artificial intelligence algorithms to identify ways in which efficiency can be improved.

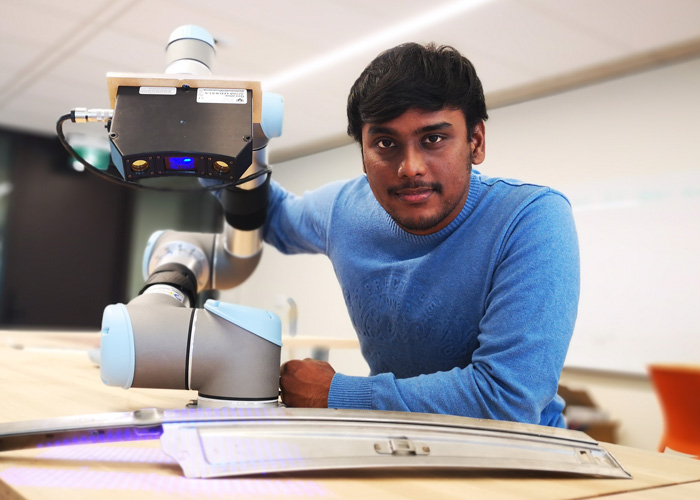

To improve factory robots, Sun’s lab is collaborating closely with KIRCHHOFF Automotive North America. Together they are developing robotic systems and 3D computer vision algorithms for auto part inspection. The robotic system can build highly accurate point clouds (computer representations of physical objects) for auto parts. By transforming these physical objects into data, the system enables AI algorithms to identify defects and improve quality control more quickly and accurately than human operators.
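To make the point-cloud idea concrete, here is a small illustrative sketch. The article does not describe the lab's actual algorithms, so this uses a plainly simpler stand-in: each scanned point is compared with a reference model of a good part, and points that sit too far from the reference are flagged. The function name `find_defect_points`, the `tolerance` parameter and the sample coordinates are all assumptions made for the example.

```python
import math

def find_defect_points(scan, reference, tolerance):
    """Return scanned points farther than `tolerance` from every reference point.

    `scan` and `reference` are lists of (x, y, z) tuples in the same units.
    This brute-force search is fine for a toy example; real point clouds
    would need a spatial index to keep the comparison fast.
    """
    defects = []
    for point in scan:
        nearest = min(math.dist(point, ref) for ref in reference)
        if nearest > tolerance:
            defects.append(point)
    return defects

# Hypothetical reference model: four points on a flat surface (z = 0).
reference_part = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (1.0, 1.0, 0.0)]
# Hypothetical scan: one point within tolerance, one raised well above the surface.
scanned_part = [(0.0, 0.0, 0.01), (1.0, 1.0, 0.5)]

print(find_defect_points(scanned_part, reference_part, tolerance=0.1))
```

Once a part exists as a list of coordinates like this, checking it becomes a computation that can run on every part coming off the line.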

“Robots have always been part of the manufacturing industry,” says Naguib. “Our task is to make the interaction between these robots and the humans that work alongside them more seamless. We should be able to communicate with them as easily as we communicate with each other.”